Continental, a German auto parts supplier, is developing an augmented reality head-up display system (AR-HUD) designed to provide a new form of dialogue between vehicles and drivers. Let's take a look at how it works.

The display system augments the real traffic scene that the driver sees: an optical projection unit places graphic elements carrying traffic information precisely into the driver's view of the outside world. In this way, the AR-HUD can significantly enhance the vehicle's human-machine interface (HMI).

The AR-HUD has reached an advanced stage of pre-development. A demonstration vehicle serves both to prove the system's feasibility and to provide valuable input for further development. Continental plans to complete preparations for series production in 2017.

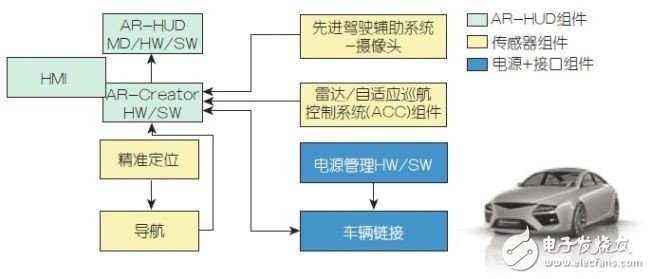

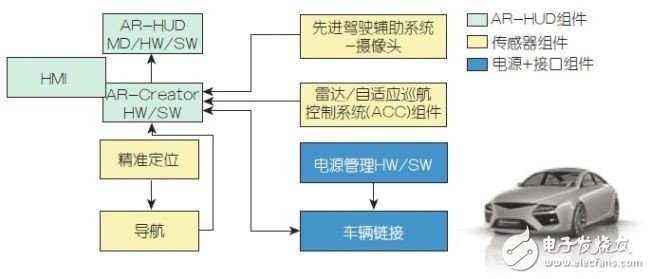

Figure 1: Overview of the augmented reality head-up display system.

AR-HUD: What you see is what you know

The AR-HUD's optical system lets the driver see the status of the driver assistance systems, and the relevance of that information, as an augmented display directly in their field of view. As a new component of the human-machine interface, the pre-production AR-HUD is tightly integrated with the environment sensors of the driver assistance systems, as well as with GPS data, map data, and vehicle dynamics data.

A radar sensor is integrated into the front bumper, and a CMOS mono camera is integrated into the base of the rear-view mirror. Selected advanced driver assistance systems (ADAS) support the first AR-HUD applications, such as Adaptive Cruise Control (ACC), road information from the navigation system, and Lane Departure Warning (LDW).

If one of the driver assistance systems detects a relevant situation, virtual graphics displayed by the AR-HUD can alert the driver to it. Besides directly improving driving safety, this form of interaction is also a key technology for automated driving, because it makes it easier for drivers to build trust in new driving functions.

Continental's AR-HUD generates two projection planes at different projection distances, referred to as the "near" (or "status") plane and the "far" (or "augmentation") plane. The near status plane appears in front of the driver, at the front end of the hood, and selectively shows status information such as the current speed, restrictions such as no-overtaking zones and speed limits, or the current settings of Adaptive Cruise Control (ACC). The driver can see this information by lowering their gaze by only about 6°. The status plane has a field of view of 5° × 1° (equivalent to about 210 mm × 42 mm), and its projection distance is 2.4 m.
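The quoted image size follows from simple geometry: a virtual image at distance d that subtends a full angle θ has an extent of 2·d·tan(θ/2). The sketch below assumes a flat image plane perpendicular to the line of sight and centered on it; the function name `virtual_image_size` is hypothetical.

```python
import math


def virtual_image_size(h_fov_deg: float, v_fov_deg: float, distance_m: float) -> tuple[float, float]:
    """Return (width, height) in metres of a flat virtual image.

    Assumes the image plane is perpendicular to the line of sight and
    centred on it, so each extent is 2 * d * tan(fov / 2).
    """
    width = 2.0 * distance_m * math.tan(math.radians(h_fov_deg) / 2.0)
    height = 2.0 * distance_m * math.tan(math.radians(v_fov_deg) / 2.0)
    return width, height


# Status ("near") plane: 5° x 1° field of view at a projection distance of 2.4 m.
w, h = virtual_image_size(5.0, 1.0, 2.4)
print(f"status plane: {w * 1000:.0f} mm x {h * 1000:.0f} mm")
```

Rounded to whole millimetres, this reproduces the 210 mm × 42 mm figure given for the status plane.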

Figure 2: Schematic diagram of the projected light path.

This near plane corresponds to the virtual image of a "conventional" head-up display built from mirror optics and a picture generation unit (PGU). The PGU consists of a thin-film transistor (TFT) display backlit by LEDs and is integrated in a compact design into the upper part of the AR-HUD module. Curved mirrors magnify the displayed content into a virtual image. Continental uses a carefully engineered optical design inside the AR-HUD to produce the two images at different projection distances, and the two light paths overlap slightly inside the module. The near optical path uses only the upper edge region of the large AR-HUD mirror (a large aspheric mirror) and has no additional folding mirror. This part of the AR-HUD is similar to the advanced second-generation HUD technology that Continental already integrates into production cars.

Augmented reality in the vehicle with cinema technology

The augmentation plane naturally plays the leading role in the AR-HUD. It projects augmented display symbols directly onto the road 7.5 meters in front of the driver, and the content adapts to the current traffic situation. The content of the far plane is generated by a new picture generation unit that Continental first exhibited at IAA 2013. As in digital cinema projectors, the graphic elements are generated by a Digital Micromirror Device (DMD). The core of this PGU is an optical semiconductor consisting of a matrix of hundreds of thousands of micromirrors, each of which can be individually tilted by an electrostatic field.

The micromirror matrix is illuminated rapidly and alternately, in time sequence, by three colored LEDs (red, green, and blue). Deflecting mirrors with a color-filter function ("dichroic mirrors") collimate the three colors into a parallel beam; depending on the color, these special mirrors either transmit or reflect the light. While one color is lit, all the micromirrors that should show that color are tilted at the same time, so they reflect the incident light through a lens and render it as individual pixels on a focusing screen further along the light path. This is repeated in rapid succession for each of the three colors. The human eye blends the three color images on the focusing screen and perceives a single full-color image.
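The time-sequential color scheme can be illustrated with a toy model of a single pixel. The sketch assumes a 60 Hz frame split into three equal red, green, and blue sub-frames, and it models gray levels by pulse-width modulation (the mirror stays "on" for a fraction of its sub-frame proportional to the desired 8-bit intensity). That modulation scheme is commonly used with DMDs but is not described in the article; the frame rate and all names (`SubFrame`, `schedule_pixel`, `perceived_rgb`) are hypothetical.

```python
from dataclasses import dataclass

COLORS = ("red", "green", "blue")  # assumed LED order within each frame


@dataclass
class SubFrame:
    color: str
    start_s: float    # when this colour's LED switches on within the frame
    on_time_s: float  # how long the pixel's micromirror is tilted "on"


def schedule_pixel(rgb: tuple[int, int, int], frame_hz: float = 60.0) -> list[SubFrame]:
    """Split one frame into R, G, B sub-frames for a single pixel.

    Hypothetical model: each 8-bit intensity becomes an on-time equal to
    (value / 255) of that colour's sub-frame.
    """
    sub_s = 1.0 / frame_hz / len(COLORS)
    return [
        SubFrame(color, i * sub_s, sub_s * value / 255.0)
        for i, (color, value) in enumerate(zip(COLORS, rgb))
    ]


def perceived_rgb(subframes: list[SubFrame], frame_hz: float = 60.0) -> tuple[int, ...]:
    """Approximate the eye's time integration: relative on-time per colour."""
    sub_s = 1.0 / frame_hz / len(COLORS)
    return tuple(round(255 * sf.on_time_s / sub_s) for sf in subframes)


pixel = (255, 128, 0)  # an orange pixel of a warning symbol
frames = schedule_pixel(pixel)
for sf in frames:
    print(f"{sf.color:>5}: LED on at {sf.start_s * 1e3:.2f} ms, mirror on {sf.on_time_s * 1e3:.2f} ms")
print(perceived_rgb(frames))
```

Because the three sub-frames follow each other within a few milliseconds, the eye cannot separate them and only the time-averaged color remains, which is what `perceived_rgb` approximates.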

From the focusing screen onward, the light path corresponds to that of a conventional head-up display: a first mirror (the folding mirror) reflects the image on the focusing screen onto a second, larger mirror (the AR-HUD mirror), which in turn reflects it onto the windshield. The illuminated surface of the augmentation optics is roughly the size of an A4 sheet of paper. In the augmentation plane, the field of view is 10° × 4.8°, which corresponds to an apparent image size of about 130 cm (width) × 63 cm (height) at the projection distance. The driver only needs to lower their gaze slightly, by 2.4°, to see the information in this plane. Both picture generation units, near and far, adjust their brightness to the ambient light and can reach more than 10,000 cd/m², so the displayed information remains easy to see in almost all ambient light conditions.
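The article only states that both picture generation units adapt their brightness to the ambient light and can exceed 10,000 cd/m². The sketch below shows one possible control law, assuming a hypothetical proportional mapping from ambient illuminance (lux) to display luminance, clamped between a night-time floor and a ceiling at the quoted 10,000 cd/m². The gain and floor values are invented for illustration and are not Continental's.

```python
MAX_LUMINANCE_CD_M2 = 10_000.0  # ceiling at the quoted peak brightness
MIN_LUMINANCE_CD_M2 = 20.0      # hypothetical floor so symbols stay visible at night
GAIN_CD_M2_PER_LUX = 0.2        # hypothetical: display luminance per lux of ambient light


def target_luminance(ambient_lux: float) -> float:
    """Map ambient illuminance to a display luminance, clamped to [floor, ceiling]."""
    if ambient_lux < 0:
        raise ValueError("ambient illuminance cannot be negative")
    raw = GAIN_CD_M2_PER_LUX * ambient_lux
    return min(MAX_LUMINANCE_CD_M2, max(MIN_LUMINANCE_CD_M2, raw))


for lux in (1, 400, 10_000, 100_000):  # roughly: night, dusk, daylight, direct sun
    print(f"{lux:>7} lx -> {target_luminance(lux):8.1f} cd/m^2")
```

A production system would likely use a nonlinear curve and smooth changes over time to avoid flicker when driving through shadows; the linear clamp only illustrates the idea.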

The approach used in the test vehicle's AR-HUD, generating two images at different projection distances, has a significant advantage: in most traffic situations, content can be shown in both the far and the near plane, so all relevant driving and status information appears within the driver's direct field of view.

AR-Creator data fusion and graphics generation

Numerous simulations and Continental's test-subject studies show that drivers find the augmentation most comfortable when it covers the road from about 18 to 20 meters in front of the car out to about 100 m, although this naturally depends on the road course. To place graphic elements at exactly the right position on the focusing screen, from which they are reflected precisely into the driver's AR-HUD field of view, the AR-Creator control unit must evaluate several sensor data streams. This requires considerable computing power.
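One way to apply this comfortable range is to gate which detected objects receive a graphic at all. The sketch assumes objects arrive as hypothetical `TrackedObject` records carrying a longitudinal distance, and it uses 18 m and 100 m as fixed bounds; as the text notes, the ideal range depends on the road, so a real system would adapt them.

```python
from typing import NamedTuple


class TrackedObject(NamedTuple):
    label: str
    distance_m: float  # longitudinal distance ahead of the vehicle


# Bounds taken from the range quoted in the text (assumed fixed here).
NEAR_LIMIT_M = 18.0
FAR_LIMIT_M = 100.0


def objects_to_augment(
    objects: list[TrackedObject],
    near_m: float = NEAR_LIMIT_M,
    far_m: float = FAR_LIMIT_M,
) -> list[TrackedObject]:
    """Keep only objects inside the comfortable augmentation range."""
    return [obj for obj in objects if near_m <= obj.distance_m <= far_m]


detections = [
    TrackedObject("car", 12.0),     # too close: below the near limit
    TrackedObject("car", 45.0),     # inside the range
    TrackedObject("truck", 130.0),  # too far
]
print(objects_to_augment(detections))
```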

AR-Creator combines data from three sources. The mono camera provides the geometry of the road layout, including clothoids ("Euler spirals"), the mathematical description of how the curvature of the lane ahead of the vehicle changes. The radar sensor data, combined with the interpretation of the camera data, determine the size, position, and distance of detectable objects in front of the vehicle. Finally, Continental's eHorizon provides a map framework into which the sensed data is read. The eHorizon used in the demo car is static and uses only navigation data. Continental has already begun work on subsequent connected, highly dynamic eHorizon products that will support a variety of data sources (e.g., car-to-car communication, traffic control centers) for display on the AR-HUD. Once vehicle dynamics, camera, and GPS data are integrated, the vehicle's position can be shown on a digital map.
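A clothoid is a curve whose curvature changes linearly with arc length, κ(x) = c0 + c1·x. Camera-based lane detection commonly approximates it near the vehicle with a third-order polynomial for the lateral offset, y(x) ≈ y0 + ψ·x + c0·x²/2 + c1·x³/6, which holds for small heading angles ψ. The sketch below evaluates that standard approximation; it is not necessarily the model AR-Creator uses, and the class name and parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClothoidLane:
    """Lane marking described as a clothoid (Euler spiral).

    Curvature varies linearly with distance: kappa(x) = c0 + c1 * x.
    Lateral offsets y are positive to the left (assumed convention).
    """
    offset_m: float        # y0: lateral offset of the marking at x = 0
    heading_rad: float     # psi: angle between the vehicle axis and the lane
    curvature: float       # c0 in 1/m
    curvature_rate: float  # c1 in 1/m^2

    def curvature_at(self, x_m: float) -> float:
        return self.curvature + self.curvature_rate * x_m

    def lateral_offset(self, x_m: float) -> float:
        """Small-angle, third-order polynomial approximation of the clothoid."""
        return (
            self.offset_m
            + self.heading_rad * x_m
            + self.curvature * x_m**2 / 2.0
            + self.curvature_rate * x_m**3 / 6.0
        )


# Hypothetical left lane marking at the start of a gentle left-hand curve.
left = ClothoidLane(offset_m=1.8, heading_rad=0.0, curvature=0.0, curvature_rate=1e-5)
for x in (20.0, 50.0, 100.0):
    print(f"x = {x:5.1f} m: y = {left.lateral_offset(x):5.2f} m, "
          f"kappa = {left.curvature_at(x):.5f} 1/m")
```

Sampling `lateral_offset` at the distances of interest gives the road outline that graphics such as lane highlights would follow.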

AR-Creator also uses the combined data to calculate how the geometry of the road ahead appears from the driver's position. This is possible because the driver's eye position is known: in the demonstration vehicle, the driver sets the correct position of the "eye box" before starting to drive. In series production, this step could be done automatically by an interior camera that detects the position of the driver's eyes and tracks the eye box accordingly. The term "eye box" refers to a rectangular area whose height and width correspond to a theoretical "window": only when looking at the road through this window does the driver see the complete AR-HUD image. Other occupants of the car cannot see the content displayed by the HUD and AR-HUD.
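The eye-box idea reduces to a point-in-rectangle test. The sketch assumes a single eye point (for example, the midpoint between both eyes) given in a hypothetical frame on the eye-box plane, with lateral y and vertical z in millimetres; the `EyeBox` class and the 130 mm × 60 mm box size are invented for illustration. The `recentered` method stands in for what an eye-tracking interior camera could do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EyeBox:
    """Rectangular eye box in a hypothetical frame: y lateral, z vertical (mm)."""
    center_y_mm: float
    center_z_mm: float
    width_mm: float
    height_mm: float

    def contains(self, eye_y_mm: float, eye_z_mm: float) -> bool:
        """True if the eye point lies inside the box, i.e. the full image is visible."""
        return (
            abs(eye_y_mm - self.center_y_mm) <= self.width_mm / 2.0
            and abs(eye_z_mm - self.center_z_mm) <= self.height_mm / 2.0
        )

    def recentered(self, eye_y_mm: float, eye_z_mm: float) -> "EyeBox":
        """Move the box to a tracked eye position, as an interior camera could."""
        return EyeBox(eye_y_mm, eye_z_mm, self.width_mm, self.height_mm)


box = EyeBox(center_y_mm=0.0, center_z_mm=0.0, width_mm=130.0, height_mm=60.0)
print(box.contains(20.0, 10.0))  # eyes near the centre
print(box.contains(80.0, 0.0))   # head moved sideways: image partly cut off
tracked = box.recentered(80.0, 0.0)
print(tracked.contains(80.0, 0.0))
```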

Based on the adjustable eye-box position, AR-Creator "knows" where the driver's eyes are and from which angle the driver views the road and the surroundings. If an assistance system reports a relevant observation, the corresponding virtual information appears at the correct point in the AR-HUD image.
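Knowing the eye position turns graphic placement into a ray-plane intersection: a symbol meant to cover a road point must be drawn where the line from the eye to that point crosses the virtual image plane. The sketch assumes a hypothetical vehicle frame (x forward, y left, z up, in metres) and a flat image plane perpendicular to the x axis, 7.5 m ahead of the eye; it ignores windshield distortion, which a real system must correct. All names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3:
    """Point in a hypothetical vehicle frame: x forward, y left, z up (metres)."""
    x: float
    y: float
    z: float


IMAGE_PLANE_DISTANCE_M = 7.5  # augmentation-plane distance quoted in the text


def project_to_image_plane(
    eye: Point3, target: Point3, plane_distance_m: float = IMAGE_PLANE_DISTANCE_M
) -> tuple[float, float]:
    """Return (y, z) where the eye-to-target ray crosses the plane x = eye.x + d."""
    dx = target.x - eye.x
    if dx <= 0.0:
        raise ValueError("target must be in front of the eye")
    t = plane_distance_m / dx  # fraction of the eye-to-target ray at the plane
    return eye.y + t * (target.y - eye.y), eye.z + t * (target.z - eye.z)


def look_down_deg(eye: Point3, image_z: float,
                  plane_distance_m: float = IMAGE_PLANE_DISTANCE_M) -> float:
    """Angle below the horizontal at which the driver sees the drawn point."""
    return math.degrees(math.atan2(eye.z - image_z, plane_distance_m))


road_point = Point3(x=50.0, y=0.0, z=0.0)  # a spot on the road 50 m ahead
for eye in (Point3(0.0, 0.0, 1.20), Point3(0.0, 0.0, 1.30)):  # two eye heights
    y, z = project_to_image_plane(eye, road_point)
    print(f"eye at {eye.z:.2f} m -> draw at y={y:.3f} m, z={z:.3f} m "
          f"({look_down_deg(eye, z):.2f} deg below horizontal)")
```

The two eye heights produce different drawing positions for the same road point, which is why the eye-box position has to be known before graphics can line up with the road.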

Less is more

Continental invested considerable research and development effort in designing the virtual information. After many design studies and real-world tests, Continental followed the principle of "less is more" in developing the AR-HUD: the developers want to present only the minimum necessary information to the driver so as not to obscure the real road scene.

For example, as a navigation aid, turn arrows can optionally be "laid" flat onto the road. When turning, the arrows can instead stand upright and point in the direction of the intended turn, so that they look like a direction sign. This design makes it possible to give virtual guidance even in tight curves, although genuine augmentation is impossible there because the visible stretch of road is too short for a perspective overlay.
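The choice between an arrow laid on the road and an upright, sign-like arrow can be expressed as a simple rule: lay the arrow flat only if the turn point lies within the stretch of road the AR-HUD can actually overlay. The sketch assumes the roughly 18 m near limit from the AR-Creator section and a hypothetical input `visible_road_m` (how far ahead the road stays inside the AR field of view, which shrinks in tight curves); neither the rule nor the names come from Continental.

```python
from enum import Enum


class ArrowMode(Enum):
    ON_ROAD = "laid flat on the road"
    UPRIGHT = "upright, like a direction sign"


MIN_AUGMENT_M = 18.0  # assumed near edge of the augmentable road


def choose_arrow_mode(distance_to_turn_m: float, visible_road_m: float) -> ArrowMode:
    """Pick how to draw a turn arrow.

    visible_road_m is a hypothetical input: how far ahead the road stays
    inside the AR field of view (short in tight curves).
    """
    if MIN_AUGMENT_M <= distance_to_turn_m <= visible_road_m:
        return ArrowMode.ON_ROAD  # turn point can be overlaid in perspective
    return ArrowMode.UPRIGHT      # no usable perspective: fall back to a sign


print(choose_arrow_mode(60.0, 100.0).value)  # open road ahead
print(choose_arrow_mode(60.0, 25.0).value)   # tight curve hides the turn point
print(choose_arrow_mode(10.0, 100.0).value)  # already turning
```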

Lane departures are handled cautiously: if the driver is likely to cross a lane marking unintentionally, the demonstration vehicle's AR-HUD merely highlights the lane boundary.
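The article does not say how the demonstrator judges a lane crossing to be unintentional. A common heuristic, assumed here, treats a drift as unintentional when the turn indicator is off, and measures urgency with time-to-line-crossing (TLC): the lateral distance to the marking divided by the lateral speed toward it. The 1-second threshold and the function names are hypothetical.

```python
import math

TLC_THRESHOLD_S = 1.0  # hypothetical warning threshold


def time_to_line_crossing(lateral_distance_m: float, lateral_speed_mps: float) -> float:
    """Seconds until the wheel reaches the marking; inf if moving parallel or away."""
    if lateral_speed_mps <= 0.0:
        return math.inf
    return lateral_distance_m / lateral_speed_mps


def highlight_lane_boundary(
    lateral_distance_m: float,
    lateral_speed_mps: float,
    turn_signal_on: bool,
    threshold_s: float = TLC_THRESHOLD_S,
) -> bool:
    """Decide whether the AR-HUD should highlight the lane boundary."""
    if turn_signal_on:
        return False  # signalled lane change: assumed intentional
    return time_to_line_crossing(lateral_distance_m, lateral_speed_mps) < threshold_s


print(highlight_lane_boundary(0.3, 0.5, turn_signal_on=False))   # drifting, no signal
print(highlight_lane_boundary(0.3, 0.5, turn_signal_on=True))    # deliberate lane change
print(highlight_lane_boundary(0.3, -0.2, turn_signal_on=False))  # moving away from the line
```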

In the future, if eHorizon receives accident information in advance, a hazard symbol with a correspondingly high attention value will appear in the driver's field of view early enough. Once this new form of interaction with the driver is established, the HMI has many options for proactively giving drivers forward-looking information about the scene ahead. Vehicle and driver will begin to "talk", even if it is a silent conversation.
