What is the hottest thing in technology right now? Artificial intelligence, machine learning, and deep neural networks are clearly the hottest of the hot. Powerful machine learning and AI have overturned much of what we thought was possible.

For example, in March 2016 DeepMind's AlphaGo defeated world champion Lee Sedol at Go, which astonished people. Before AlphaGo, Go software could not even beat amateur 6-dan players, let alone top professionals! If you are a programmer with an idea, can you play with machine learning at home? After a little research, I found that it is not that simple at all.

A friend of the author has a child who wants to excel at Go. He found that Go tutoring classes cost money, finding someone to coach games costs money, and as the child's playing strength rises, the price rises geometrically. He had also heard that Go AI is already very strong. Could he make a one-time investment in strong Go software and hardware, so that the child could effectively play against a master at any time, which would help the child improve?

Four Titan X GPUs and two Xeon E5 CPUs: already a very strong configuration...

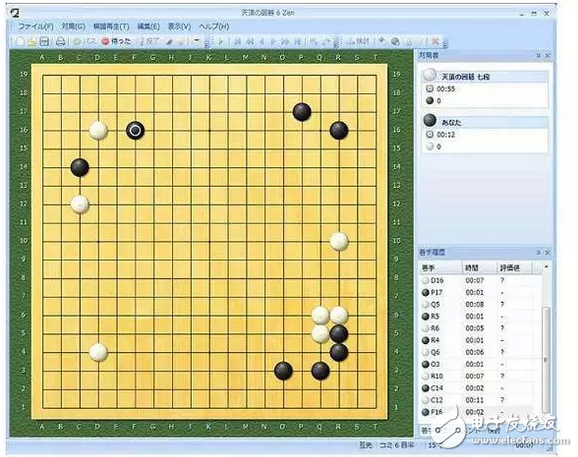

I thought the logic made sense: AlphaGo has beaten human masters, so why shouldn't humans practice against AlphaGo? So I looked into it. Running the Japanese Go software Zen on my own computer with a Core i7-6700K processor gives roughly amateur 5-dan or 6-dan strength, which is obviously not what he wants. DeepZenGo, which played Cho Chikun a few days ago, ran on a much bigger configuration: two Xeon E5 v4 processors and four Titan X GPUs. Although it lost to Cho Chikun, it showed considerable strength.

A few days ago, DeepMind released news again: AlphaGo's strength has risen sharply, and it will start playing again early next year. I had a whimsical thought. Money is no object for my friend anyway, so if Google released the software, could he get a standalone version of AlphaGo? Playing every day against an AI stronger than Lee Sedol, the child would surely improve faster at Go. But on closer study, this is not the case: the processor AlphaGo uses cannot be bought, at any price!

Google's artificial intelligence processor: the TPU

This table shows the configurations and estimated playing strength that Google gave when it published in the journal Nature. At the time, it only stated how many CPUs and GPUs corresponded to how much playing strength. If one "CPU" means one core, current Xeon E5 v4 chips already come in 22-core versions; if it means one whole processor, then 48 CPUs would require very expensive blade servers, although on the ever-versatile Taobao, second-hand rack-mounted blade servers are not that expensive.
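
The two readings of "48 CPUs" lead to very different bills. Below is a minimal Python sketch of the arithmetic, assuming the 48-CPU figure and the 22-core Xeon E5 v4 mentioned above; the function name `chips_needed` is hypothetical.

```python
import math

CORES_PER_CHIP = 22  # highest Xeon E5 v4 core count cited in the text


def chips_needed(cpus: int, cpu_means_core: bool) -> int:
    """Number of physical Xeon E5 v4 chips needed for a given 'CPU' count."""
    if cpu_means_core:
        # One "CPU" = one core: pack the cores into 22-core chips.
        return math.ceil(cpus / CORES_PER_CHIP)
    # One "CPU" = one whole processor, i.e. one socket each.
    return cpus


print(chips_needed(48, cpu_means_core=True))   # 3
print(chips_needed(48, cpu_means_core=False))  # 48
```

Under the per-core reading, three chips would do, which is a couple of servers at most; under the per-processor reading, 48 sockets puts you in the blade-server territory the text describes.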

Judging from the configurations DeepMind announced at the time, a standalone version of AlphaGo did not seem out of reach, and it would be strong enough. But in May of this year, Google revealed details of its own custom processor. For this work Google relies not on Intel or AMD processors but on its own processor optimized for machine learning, named the Tensor Processing Unit (TPU). AlphaGo runs on TPUs.

The TPU's role is to accelerate neural networks for machine learning. Google has not published specifics on how the TPU works or what instructions it supports, so outsiders have little to go on; not even the chip's foundry is known. Google says the TPU is an auxiliary computing device that still works alongside CPUs and GPUs. The key point is that the TPU computes with 8-bit precision, while our general-purpose processors are 64-bit. As a result, for neural network computation the TPU delivers far more performance per watt than a traditional CPU, which also makes it better suited to large-scale distributed computing.
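
Since Google has not published how the TPU handles its 8-bit math, the following is only a generic illustration of why 8-bit arithmetic can stand in for floating point in neural networks: values are scaled into the int8 range, multiplied as small integers with a wider 32-bit accumulator, and rescaled at the end. It is a minimal Python/NumPy sketch of symmetric linear quantization, not the TPU's actual scheme; `quantize` and `int8_dot` are hypothetical names.

```python
import numpy as np


def quantize(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric linear quantization of float values to int8."""
    scale = float(np.max(np.abs(x))) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero input: any scale works
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def int8_dot(a: np.ndarray, b: np.ndarray) -> float:
    """Approximate a float dot product using 8-bit integer multiplies."""
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    # Multiply the 8-bit values but accumulate in 32 bits to avoid overflow.
    acc = np.dot(qa.astype(np.int32), qb.astype(np.int32))
    # Rescale the integer result back to a float approximation.
    return float(acc) * sa * sb


rng = np.random.default_rng(0)
a = rng.standard_normal(256)
b = rng.standard_normal(256)
print(float(np.dot(a, b)), int8_dot(a, b))  # close, but not identical
```

Integer multipliers are generally much smaller and cheaper in silicon than 64-bit floating-point units, which is the intuition behind the performance-per-watt claim; the price is a rounding error bounded by the quantization step.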

IBM may have started using computers to simulate neurons earlier than any other company. The research was not entirely self-initiated; an external driver was DARPA, the U.S. Defense Advanced Research Projects Agency.

Since 2008, DARPA has given IBM $53 million for SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics; the acronym spells "synapse", the junction between nerve cells), a program to build neural computing that mimics the brain. TrueNorth is one result of this project.

IBM's TrueNorth chip

The significance of this research is that in today's computers, processing and storage are separate and rely heavily on a bus to move data between them: the so-called von Neumann architecture. Our brains work differently. Nerve signals do not travel especially fast, but the brain has an enormous number of cells and relies on massively distributed processing. Existing ways of imitating the brain depend on piling up processors, so their energy efficiency is poor. IBM's TrueNorth aims to break this barrier by simulating neurons directly on the chip. The 2011 prototype had 256 neurons.
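
A common textbook abstraction of a spiking neuron is the leaky integrate-and-fire model: each neuron keeps its own membrane potential locally, lets it decay, adds incoming current, and emits a spike when a threshold is crossed. The Python sketch below is that generic model with arbitrary parameters, not TrueNorth's actual neuron circuit; the class name and values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""
    threshold: float = 1.0  # membrane potential at which the neuron spikes
    leak: float = 0.9       # fraction of potential kept each time step
    potential: float = 0.0  # current membrane potential, stored with the neuron

    def step(self, input_current: float) -> bool:
        # Decay the stored potential, then add this step's input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


neuron = LIFNeuron()
spikes = [neuron.step(0.3) for _ in range(10)]
print([i for i, fired in enumerate(spikes) if fired])  # [3, 7]
```

With a constant input of 0.3 the potential builds up over four steps before firing, so the neuron spikes at steps 3 and 7 (counting from 0). Because the state and the update live together, no separate memory fetch over a bus is needed, which is the intuition behind putting neurons directly on a chip.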

By 2014, TrueNorth had about 1 million programmable neurons and 256 million programmable synapses, spread across 4,096 neurosynaptic cores. TrueNorth's timing was almost accidental: IBM started the research early, and by the time deep learning and neural networks became hot, the processor had matured. The IBM and DARPA websites show results of this chip recognizing video content; they are worth a look.
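
The 2014 figures fit together if each of the 4,096 cores holds 256 neurons wired through a 256 × 256 synaptic crossbar. Those per-core numbers are an assumption taken from IBM's published description of TrueNorth rather than from this article; a quick check in Python:

```python
# Per-core figures assumed from IBM's published TrueNorth description.
CORES = 4096
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256  # each core: 256 input axons x 256 neurons crossbar

neurons = CORES * NEURONS_PER_CORE
synapses = CORES * AXONS_PER_CORE * NEURONS_PER_CORE

print(neurons)   # 1048576, about 1 million
print(synapses)  # 268435456
```

So "256 million" synapses is 256 × 2^20 in binary units, slightly over 268 million in decimal terms.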

Microsoft's FPGAs

Microsoft started Project Catapult in 2012, when Steve Ballmer was at the helm. Microsoft found that hardware vendors could not supply the hardware it needed. It was spending billions of dollars a year on hardware, yet the existing hardware was poorly suited to the machine learning behind its search algorithms.

When Bitcoin mining machines appeared, FPGAs proved more efficient and more power-saving than graphics cards.

Microsoft's route is the FPGA (field-programmable gate array), whose strength is parallel computing. Remember the bitcoin frenzy a few years ago? At first, people mined with graphics cards (GPUs). Later, dedicated mining machines appeared that used FPGAs, and the early enthusiasts dug their first bucket of gold.
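
Mining rewards parallel hardware because every candidate nonce can be checked independently, so the search space splits cleanly across cores, GPU threads, or FPGA pipelines. The Python sketch below is a toy proof-of-work search under simplified assumptions: the header bytes, 4-byte nonce encoding, big-endian comparison, and target are made up for illustration and do not match Bitcoin's real block format; `search_nonces` and `split_search` are hypothetical names.

```python
import hashlib
from typing import Optional


def double_sha256(data: bytes) -> bytes:
    """Apply SHA-256 twice, as Bitcoin-style proof of work does."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def search_nonces(header: bytes, target: int, start: int, stop: int) -> Optional[int]:
    """Return the first nonce in [start, stop) whose hash value is below target."""
    for nonce in range(start, stop):
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce
    return None


def split_search(header: bytes, target: int, total: int, workers: int) -> Optional[int]:
    """Partition [0, total) into independent chunks, one per worker."""
    chunk = total // workers
    ranges = [
        (i * chunk, total if i == workers - 1 else (i + 1) * chunk)
        for i in range(workers)
    ]
    # The chunks share no state, so real hardware could run them at the
    # same time; this sketch simply runs them one after another.
    hits = [search_nonces(header, target, lo, hi) for lo, hi in ranges]
    found = [n for n in hits if n is not None]
    return min(found) if found else None


header = b"toy block header"
target = 1 << 248  # roughly 1 in 256 hashes qualifies
print(split_search(header, target, total=8192, workers=4))
```

The split search returns the same nonce as a single sequential scan, because the smallest of each chunk's first hit is the overall first hit; the speedup on real hardware comes from checking the chunks simultaneously.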

Running the Bing page-ranking service: with vs. without FPGA assistance

Microsoft has applied FPGAs to services such as the Bing search engine, significantly improving efficiency. Although FPGAs are hard to program, Microsoft is extending them to more applications, such as Office 365, to improve quality of service. Microsoft's FPGAs come from Altera. Interestingly, Intel bought Altera for $16.7 billion, the largest acquisition in Intel's history.

Intel: R&D and acquisitions go hand in hand

Microsoft and Intel are long-term partners. Intel has acquired Altera, the FPGA and SoC company whose technology Microsoft's FPGAs use. Visit Altera's official website today and you will see the familiar Intel logo added to its FPGA pages, along with popular topics such as machine learning and autonomous driving.

Of course, Intel is a leader in processors and semiconductors, and it reads the industry more thoroughly than most. Although most of its products are traditional CPUs, Intel does have competitive core products for machine learning: the Xeon Phi processor and the Xeon Phi coprocessor.

The order of development is this: Intel's existing Xeon Phi coprocessor accelerates computation, and it was followed by the launch of the Xeon Phi processor. The processor integrates Intel Omni-Path Architecture (OPA) to improve the energy and space efficiency of high-performance computing. Intel's official news also shows the speedups Xeon Phi delivers for machine learning. And Intel has not slowed its pace of acquisitions: many AI companies are ending up in Intel's pocket.

What if I want to work in this field?

As the hottest field around, artificial intelligence, machine learning, and deep neural networks are all over the media, and the salary plus stock that companies offer is very attractive. Companies are practically fighting over talent, and the price is high. Remember AlphaGo's DeepMind? It has posted job openings recently too.

According to its job postings, to become a research scientist at DeepMind you generally need a PhD in neuroscience or computer science and solid publications before they will consider you; other positions may have lower requirements.

Although the field is hot now, there are actually not many deep neural network systems in the world, so landing a high-paying job doing cutting-edge research is not easy. Some readers of this article are surely college students: read widely, work hard, and get into a world-leading university in computer science, neuroscience, or a related field. That is the shortcut into machine learning, deep neural networks, and artificial intelligence. Hardware optimized for machine learning is only just getting started, and the road ahead is long, so this field will stay hot for some time.

A person's destiny depends on self-improvement, of course, but also on the course of history. History has just opened the window of artificial intelligence, and there is still a long way to go from the doorway to the inner hall. This historical opportunity is right in front of you, young people: now is the time to strive and change your fate.

To return to the question in this article's title: today, most machine learning and AI runs on Xeon + GPU architectures, but custom TPUs and FPGAs used as coprocessors achieve twice the result with half the effort. The author personally believes the current form is probably not the most efficient one. In the future, machine brains may really become smarter than us.
